Cheat Sheet: Microsoft Azure Blob Storage

Learn Microsoft Azure Blob Storage with Zuar's handy cheat sheet. Tiers, containers, creation, deletion, leasing and more.

For the tutorials, we will mainly be using Azure Storage Explorer and the Azure Python SDK.

What is Blob Storage?

Azure Blob (binary large object) Storage is Microsoft's cloud object storage solution. An 'object' can be an image, text file, audio file, backup, log, and so on. Azure Blob Storage is optimized for storing very large volumes of unstructured data, meaning data that isn't constrained to a specific model or schema.

Blob Storage is highly convenient and customizable to your needs. Users have many options to store and retrieve data from an instance of Azure Blob Storage.

Microsoft maintains client libraries for .NET, Java, Node.js, Python, Go, PHP, and Ruby. You can also access objects directly over HTTP/HTTPS.

Another selling point is that you also have security built-in through Microsoft's platform, as well as the additional benefit of high availability and disaster recovery.

What is the structure of Blob?

Blob Storage is relatively intuitive and resembles a traditional file hierarchy of directory > subdirectory > file. Each Azure Blob Storage account can contain an unlimited number of containers, and each container can contain an unlimited number of blobs.

People often think of the Azure Blob Storage container as the directory in the above example, and try to create folders within the containers to replicate a traditional structure, producing a virtual file structure.

However, it is better to think of the container as the subdirectory from above and the account itself as the directory. To avoid unnecessary complications, try to make the files as flat as possible without virtual subdirectories.
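To make the account > container > blob hierarchy concrete, here is a minimal sketch of how a blob's URL is assembled from those three levels. It assumes the default public-cloud endpoint suffix `blob.core.windows.net`; the account name `mystorageacct` and the helper `blob_url` are hypothetical names used only for illustration.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build the HTTPS URL of a blob (assumes the default public-cloud endpoint)."""
    # Forward slashes in a blob name are kept as-is: they only create
    # "virtual" folders, the blob still lives directly in the container.
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob_name)}"


# Hypothetical account name; first a flat blob name, then a virtual-folder name.
print(blob_url("mystorageacct", "pytesting", "anchor.jpg"))
print(blob_url("mystorageacct", "pytesting", "images/2021/anchor.jpg"))
```

The second call shows why virtual subdirectories add little: the 'folder' is just part of the blob's name.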

What are the types of blobs?

- Block Blobs: Optimized for uploading large amounts of data efficiently. A block blob is made up of blocks, each identified by a block ID, and can hold up to 50,000 blocks.

- Page Blobs: Collections of 512-byte pages optimized for random read/write operations.

- Append Blobs: Made up of blocks like block blobs, but optimized for append operations: new blocks are always added to the end of the blob. Updating or deleting existing blocks is not supported.
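As a rough illustration of the 50,000-block limit, the sketch below works out how many blocks a file would need at a given block size. The helper names and the 4 MiB block size are assumptions chosen for the example, not values taken from the service.

```python
MAX_BLOCKS_PER_BLOB = 50_000  # block limit quoted in the list above
MIB = 1024 * 1024


def blocks_needed(file_size_bytes: int, block_size_bytes: int) -> int:
    """Number of blocks required to hold a file at a given block size."""
    if file_size_bytes < 0 or block_size_bytes <= 0:
        raise ValueError("file size must be >= 0 and block size must be > 0")
    # Integer ceiling division avoids floating-point rounding on large files.
    return (file_size_bytes + block_size_bytes - 1) // block_size_bytes


def fits_in_block_blob(file_size_bytes: int, block_size_bytes: int) -> bool:
    """True if the file can be stored without exceeding the block limit."""
    return blocks_needed(file_size_bytes, block_size_bytes) <= MAX_BLOCKS_PER_BLOB


# A 10 GiB file split into 4 MiB blocks (block size chosen only for illustration).
print(blocks_needed(10 * 1024 * MIB, 4 * MIB))  # 2560
```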

What are the storage tiers?

Azure also provides the option to customize storage options based on the purpose of the storage through different tiers. Currently, Microsoft offers three different storage tiers: Hot, Cool, and Archive.

Hot Access Tier

This tier is used for storing objects that are actively and consistently read from and written to. Its access costs are the lowest of the tiers, but its storage costs are the highest.

Generally, once frequent access to the data is no longer necessary, the data should be migrated to a different tier.

Cool Access Tier

Conversely, the cool access tier has higher access costs and lower storage costs than the hot access tier. It is meant for data that is no longer used frequently but must remain immediately accessible should the need arise. Data in this tier should be expected to remain for at least 30 days.

Archive Access Tier

This tier has the cheapest storage cost and the highest access cost of the tiers. Microsoft expects that data placed in this tier will persist for at least 180 days and the account will incur an early deletion charge if the data is removed prior to 180 days.

Data in this tier is not immediately available: Microsoft takes these objects offline and you will not be able to read or modify them. In order to access objects in this tier, you must first 'rehydrate' them, which is basically Microsoft's terminology for moving the object back to an online tier such as cool or hot access.

Here is a quick graph to help picture how the access tiers are utilized:

The Y axis shows how often the data needs to be accessed, while the X axis shows how long the data needs to be retained. The 'Hot' tier is accessed often but does not need to be retained for long. The 'Cool' tier is not expected to be accessed often but is expected to remain available for 30-180 days. The 'Archive' tier is not expected to be accessed at all except in unusual circumstances.
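The trade-off between access frequency and retention can be sketched as a small decision function. This is only an illustrative heuristic: the 30- and 180-day values come from the tier descriptions above, while the 'reads per month' cut-offs and the function name `suggest_tier` are assumptions made up for the example.

```python
def suggest_tier(reads_per_month: int, retention_days: int) -> str:
    """Suggest an access tier from expected access frequency and retention.

    The read-frequency cut-offs are arbitrary assumptions for illustration;
    only the 30- and 180-day retention values come from the tier descriptions.
    """
    if retention_days >= 180 and reads_per_month == 0:
        return "Archive"  # long-lived data that is essentially never read
    if retention_days >= 30 and reads_per_month <= 1:
        return "Cool"  # rarely read, but must stay immediately available
    return "Hot"  # frequently read or short-lived data


print(suggest_tier(reads_per_month=100, retention_days=7))
print(suggest_tier(reads_per_month=1, retention_days=60))
print(suggest_tier(reads_per_month=0, retention_days=365))
```

In practice the right thresholds depend on current pricing and your own access patterns, so treat the numbers as placeholders.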

How do I create a storage account?

To create a storage account, you will have to log into your Azure account and navigate to 'Home' > 'Storage accounts'.

From here, you will fill out all of the necessary information for the new account. If you already have a storage account, you can skip this step.

Please note that once you have created the resource, you can see what the default access tier is for the account in the properties. This can be changed based on your needs, but be aware of what new objects will default to.

How do I create a container?

Once you have a storage account, you will then be able to create a container.

Storage Explorer

Navigate to the subscription and storage account, right-click 'Blob Containers', select 'Create Blob Container', and give the container a name.

You should now see an empty container.

Python

To connect to the storage account via Python, there are a couple of things that you need to take care of first. The first thing you need is the connection string for the resource.

You can find this by logging into the Azure portal, navigating to the storage account, and selecting 'Access keys' in the 'Security + networking' section.

Setting the Environment Variable

Once you have the connection string, Microsoft recommends setting it as an environment variable to avoid hard-coding it into any script. You can do this through the command line or with the tool built into Windows (type 'env' in the Start menu search bar to find it).
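On the Python side, reading that variable might look like the sketch below. It assumes the variable is named `az_storage_conn_str`, as in the snippets in this cheat sheet; the helper names and the demo connection string are hypothetical. Failing early with a clear message is easier to debug than passing `None` to the SDK.

```python
import os


def load_connection_string(var_name: str = "az_storage_conn_str") -> str:
    """Return the connection string from the environment, or fail with a clear error."""
    conn_str = os.getenv(var_name)
    if not conn_str:
        raise RuntimeError(f"Environment variable {var_name!r} is not set")
    return conn_str


def parse_connection_string(conn_str: str) -> dict:
    """Split the 'Key=Value;Key=Value' pairs of a connection string into a dict."""
    parts = {}
    for segment in conn_str.split(";"):
        if segment:
            # Split on the first '=' only: account keys are base64 and may end in '='.
            key, _, value = segment.partition("=")
            parts[key] = value
    return parts


# Made-up connection string, used only to show the parsing.
demo = "DefaultEndpointsProtocol=https;AccountName=demo;AccountKey=abc123==;EndpointSuffix=core.windows.net"
print(parse_connection_string(demo)["AccountName"])
```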


Once you have added the connection string as an environment variable, you can start with the code to add a container.

If you have not installed the client library (the azure-storage-blob package, installable with pip), please be sure to do so. If you have any questions regarding any of the Python code snippets, the SDK documentation is a great resource.

import os
from azure.storage.blob import BlobServiceClient

conn_str = os.getenv('az_storage_conn_str')

# Initialize a BlobServiceClient object
blob_service_client = BlobServiceClient.from_connection_string(conn_str)

# Create a container
container_client = blob_service_client.create_container('pytesting')

The code steps are:

Import dependencies > Store connection string environment variable as script variable > Initialize BlobServiceClient object > Create container using BlobServiceClient.create_container(<container_name>) method

Following the execution of this code, you should be able to refresh the 'containers' section in the storage explorer to see the new container.
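Container creation fails if the name breaks Azure's naming rules, so it can help to check the name before calling `create_container`. The sketch below encodes the rules for user-created containers: 3 to 63 characters, lowercase letters, digits, and hyphens only, starting and ending with a letter or digit, with no consecutive hyphens. The function name is hypothetical, and system containers such as $logs are outside its scope.

```python
import re

# One or more lowercase alphanumeric runs separated by single hyphens.
_CONTAINER_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_container_name(name: str) -> bool:
    """Check a candidate container name against the naming rules described above."""
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


for candidate in ("pytesting", "PyTesting", "my--container", "ab"):
    print(candidate, is_valid_container_name(candidate))
```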

How do I create a blob?

Storage Explorer

Uploading a blob using Storage Explorer is fairly straightforward: Open the container, select 'Upload', select the file to upload, and press 'Upload'.

One thing to note here is that you are given the option to choose the Access Tier and the Blob Type. The Access Tier determines the access vs storage costs of the blob while the Blob Type determines how the blob is optimized.

In practice, you will want to ensure that these options suit your purpose. For this example, I will leave them at their defaults.

Once the transfer is complete, your file is now stored as a blob.

Python

import os
from azure.storage.blob import BlobServiceClient

conn_str = os.getenv('az_storage_conn_str')

# Initialize a BlobServiceClient object
blob_service_client = BlobServiceClient.from_connection_string(conn_str)

# Initialize a blob client
blob_client = blob_service_client.get_blob_client(
    container='pytesting',  # container to write to
    blob='anchor.jpg'  # name of blob
)

# Write file to blob
with open('./data/anchor.jpg', 'rb') as f:
    blob_client.upload_blob(f)

The code steps are:

Import dependencies > Store connection string environment variable as script variable > Initialize BlobClient object > Upload blob using BlobClient.upload_blob(<binary_file_object>) method

You can see the results in the storage explorer for this as well.
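By default, `upload_blob` raises an error if a blob with the same name already exists, so running the upload twice will fail. A minimal sketch of a helper that makes the choice explicit is shown below. It assumes you pass in a `BlobClient` created as in the snippet above; the helper name `upload_file` is hypothetical.

```python
def upload_file(blob_client, path: str, overwrite: bool = False) -> None:
    """Upload a local file through an already-initialized BlobClient."""
    with open(path, "rb") as f:
        # overwrite=False (the SDK default) raises if the blob already exists;
        # overwrite=True replaces the existing blob instead.
        blob_client.upload_blob(f, overwrite=overwrite)
```

For example, `upload_file(blob_client, './data/anchor.jpg', overwrite=True)` would replace the blob uploaded earlier.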

How do I change a blob's access tier?

Once a blob has been created and you find that your retention/access needs have changed for the data, you can update the blob's access tier to match these requirements and make more efficient use of your resources.

One of the easiest ways to accomplish this is through the Storage Explorer. In Storage Explorer, navigate to the blob you want to update and right-click then select 'Change Access Tier' from the menu.
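The same change can also be made from Python. The sketch below assumes a `BlobClient` for a block blob, obtained as in the upload example, and uses the SDK's `set_standard_blob_tier` method. The wrapper name `change_access_tier` and its validation against the three tiers described earlier are additions for illustration.

```python
VALID_TIERS = ("Hot", "Cool", "Archive")  # the three tiers described earlier


def change_access_tier(blob_client, tier: str) -> None:
    """Move a block blob to another access tier via an existing BlobClient."""
    if tier not in VALID_TIERS:
        raise ValueError(f"tier must be one of {VALID_TIERS}, got {tier!r}")
    # set_standard_blob_tier accepts the tier name as a string.
    blob_client.set_standard_blob_tier(tier)
```

Calling `change_access_tier(blob_client, 'Cool')` would move the blob to the cool tier. Keep in mind that a blob moved to 'Archive' must be rehydrated before it can be read again.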

How do I download a blob?

Storage Explorer

In Storage Explorer, this is a simple process. The interface provides a clear download option that will prompt you for a path. Additionally, you can double-click the file to open it from temporary local storage.

Python

In Python, it might be useful to first see what blobs are contained within the container before downloading. The following code will print out each blob within the container.

import os
from azure.storage.blob import BlobServiceClient

conn_str = os.getenv('az_storage_conn_str')

# Initialize a BlobServiceClient object
blob_service_client = BlobServiceClient.from_connection_string(conn_str)

# Initialize a container from its name
container_client = blob_service_client.get_container_client('pytesting')

# Print the blob names in the container
blob_list = container_client.list_blobs()
for blob in blob_list:
    print(blob.name)

To download one of these blobs by name, you can use the snippet below.

# Download a blob to local
with open('./data/from_blob_anchor.jpg', 'wb') as f:
    f.write(container_client.download_blob('anchor.jpg').readall())

Here we can see the resulting download with the original image.
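Note that `readall()` loads the entire blob into memory before it is written out. For large blobs, a possible alternative is to let the downloader write straight into the open file with `readinto`. The sketch below assumes a `ContainerClient` like the one created above; the helper name `download_to_file` is hypothetical.

```python
def download_to_file(container_client, blob_name: str, dest_path: str) -> int:
    """Stream a blob into a local file and return the number of bytes written."""
    downloader = container_client.download_blob(blob_name)
    with open(dest_path, "wb") as f:
        # readinto() writes the blob's content into the stream as it arrives,
        # instead of building one large bytes object first.
        return downloader.readinto(f)
```

For example: `download_to_file(container_client, 'anchor.jpg', './data/from_blob_anchor.jpg')`.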

HTTP Request

You can also retrieve a blob using an HTTPS/HTTP request. One way to find the URL of the blob is by using the Azure portal by going to Home > Storage Account > Container > Blob > Properties.

However, probably the easiest way is to find the blob in the Storage Explorer, right-click, then select 'Copy URL'. This will copy the resource URL directly to the clipboard.

To download the blob, navigate to the copied URL and your browser will initiate the download. First, however, you will need to set up authentication for the resource or enable public access.

For this example, I'll set up public access to the container. To accomplish this, simply right-click the container in the storage explorer and select 'Set Public Access Level...' and fill out the form.
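Once public access is enabled, the same request can be made from a script instead of a browser. The sketch below uses only the Python standard library and assumes the URL points at a publicly readable blob; the helper name `download_public_blob` is hypothetical.

```python
import shutil
import urllib.request


def download_public_blob(url: str, dest_path: str) -> None:
    """Fetch a publicly readable blob URL and save its content to disk."""
    with urllib.request.urlopen(url) as response, open(dest_path, "wb") as f:
        # Copy in chunks so large blobs are not held in memory all at once.
        shutil.copyfileobj(response, f)
```

You would call it with the URL copied from Storage Explorer, which has the form `https://<account>.blob.core.windows.net/<container>/<blob>`. Without public access or a valid token in the URL, the request is rejected and `urlopen` raises an error.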


How do I delete a blob?

Storage Explorer

To delete a blob in Storage Explorer, right-click the target blob and select 'Delete'.

Python

import os
from azure.storage.blob import BlobServiceClient

conn_str = os.getenv('az_storage_conn_str')

# Initialize a BlobServiceClient object
blob_service_client = BlobServiceClient.from_connection_string(conn_str)

# Initialize a container from its name
container_client = blob_service_client.get_container_client('pytesting')

# Delete blob by name
container_client.delete_blob('anchor.jpg')

How do I delete a container?

Storage Explorer

Deleting a container through Storage Explorer is almost identical to deleting a blob, except that here you right-click the container itself and then select 'Delete'.

Python

Deleting a container with Python is also straightforward. Once you initialize the container client object, you can call its 'delete_container' method.

# Initialize a container from its name
# (reuses blob_service_client from the previous snippet)
container_client = blob_service_client.get_container_client('pytesting')

# Delete container
container_client.delete_container()

Following these deletions, the only container that remains is the default $logs container.

How do I soft-delete a blob?

Sometimes you may need to restore an object after it has been deleted. Where this is likely, it makes sense to set a retention policy on deleted blobs. The policy defines a period after deletion during which a deleted blob can still be restored.

Python

import os
from azure.storage.blob import BlobServiceClient
from azure.storage.blob import RetentionPolicy

conn_str = os.getenv('az_storage_conn_str')

# Initialize a BlobServiceClient object
blob_service_client = BlobServiceClient.from_connection_string(conn_str)

# Set the retention policy for the service
blob_service_client.set_service_properties(delete_retention_policy=RetentionPolicy(enabled=True, days=5))

# Create a new container
container_client = blob_service_client.create_container('retentiontest')

# Initialize a blob client
blob_client = blob_service_client.get_blob_client(
    container='retentiontest',  # container to write to
    blob='polygon.png'  # name of blob
)

# Write file to blob
with open('./data/polygon.png', 'rb') as f:
    blob_client.upload_blob(f)

# Delete blob
blob_client.delete_blob()

# Undelete blob
blob_client.undelete_blob()
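If you do not know in advance which blobs were deleted, one possible approach is to list the container with soft-deleted blobs included and restore each one. The sketch below assumes a `ContainerClient` for a container covered by the retention policy; the helper name `restore_deleted_blobs` is hypothetical.

```python
def restore_deleted_blobs(container_client) -> list:
    """Undelete every soft-deleted blob in a container and return their names."""
    restored = []
    # include=['deleted'] asks the service to list soft-deleted blobs as well.
    for blob in container_client.list_blobs(include=["deleted"]):
        if blob.deleted:
            container_client.get_blob_client(blob.name).undelete_blob()
            restored.append(blob.name)
    return restored
```

Blobs whose retention period has already expired are gone permanently and will not appear in the listing.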

How do I lease a blob?

A lease on a blob ensures that no one other than the leaseholder can modify or delete that object. Leases can be created for a fixed duration (15 to 60 seconds) or an infinite duration. The example below will initialize an infinite duration lease on the blob and lock other users from modifying it.

Python

import os
from azure.storage.blob import BlobServiceClient

conn_str = os.getenv('az_storage_conn_str')

# Initialize a BlobServiceClient object
blob_service_client = BlobServiceClient.from_connection_string(conn_str)

# Initialize a container from its name
container_client = blob_service_client.get_container_client('retentiontest')

# Get the blob client
blob_client = container_client.get_blob_client('polygon.png')

# Initialize a lease on the blob
lease = blob_client.acquire_lease()

To test this, we can view its properties in Storage Explorer.
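For short critical sections, a fixed-duration lease that is always released afterwards may be more convenient than an infinite one. The sketch below wraps `acquire_lease` and the lease object's `release` method in a context manager. It assumes a `BlobClient` like the one above; the name `leased` and the default of 15 seconds are choices made for this example.

```python
from contextlib import contextmanager


@contextmanager
def leased(blob_client, seconds: int = 15):
    """Hold a lease on a blob for the duration of a with-block."""
    if seconds != -1 and not 15 <= seconds <= 60:
        raise ValueError("lease duration must be 15-60 seconds, or -1 for infinite")
    lease = blob_client.acquire_lease(lease_duration=seconds)
    try:
        yield lease
    finally:
        # Release the lease even if the body raised, so others can write again.
        lease.release()
```

Inside the block, write operations need to present the lease, for example `blob_client.upload_blob(data, overwrite=True, lease=lease)`.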

How do I break a lease on a blob?

Python

To break a lease in Python, you need to import the BlobLeaseClient object, initialize it with the blob client, and then call its 'break_lease' method.

If you have the initial lease object that you created as a result of the above code in memory, you can call the lease object's 'break_lease' method instead.

import os
from azure.storage.blob import BlobServiceClient
from azure.storage.blob import BlobLeaseClient

conn_str = os.getenv('az_storage_conn_str')

# Initialize a BlobServiceClient object
blob_service_client = BlobServiceClient.from_connection_string(conn_str)

# Initialize a container from its name
container_client = blob_service_client.get_container_client('retentiontest')

# Get the blob client
blob_client = container_client.get_blob_client('polygon.png')

# Break the lease
BlobLeaseClient(blob_client).break_lease()

How do I view and set a container access policy?

Python

The code below creates an access policy for the container, updates the container with the policy (including Public Access), and prints the new policy parameters.

import os
from azure.storage.blob import BlobServiceClient
from azure.storage.blob import PublicAccess, AccessPolicy, ContainerSasPermissions

conn_str = os.getenv('az_storage_conn_str')

# Initialize a BlobServiceClient object
blob_service_client = BlobServiceClient.from_connection_string(conn_str)

# Initialize a container from its name
container_client = blob_service_client.get_container_client('retentiontest')

# Set Access Policy
access_policy = AccessPolicy(permission=ContainerSasPermissions(read=True, write=True))
identifiers = {'read': access_policy}
container_client.set_container_access_policy(
    signed_identifiers=identifiers,
    public_access=PublicAccess.Container
)

# Print container access policy
print(container_client.get_container_access_policy())

We can also check the Storage Explorer to verify the changes.
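The policy above has no start or expiry time. If you want it to be time-limited, `AccessPolicy` also accepts `start` and `expiry` arguments. The sketch below only computes such a window in UTC; the helper name `policy_window` and the one-hour lifetime are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def policy_window(hours_valid: int, now: Optional[datetime] = None) -> dict:
    """Return start/expiry keyword arguments for a time-limited access policy."""
    start = now or datetime.now(timezone.utc)
    return {"start": start, "expiry": start + timedelta(hours=hours_valid)}


# A window that opens now and closes in one hour (lifetime chosen arbitrarily).
window = policy_window(1)
print(window["expiry"] - window["start"])
```

The result could then be passed along, for example as `AccessPolicy(permission=ContainerSasPermissions(read=True), **policy_window(1))`.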

Check out some of our other database cheat sheets:

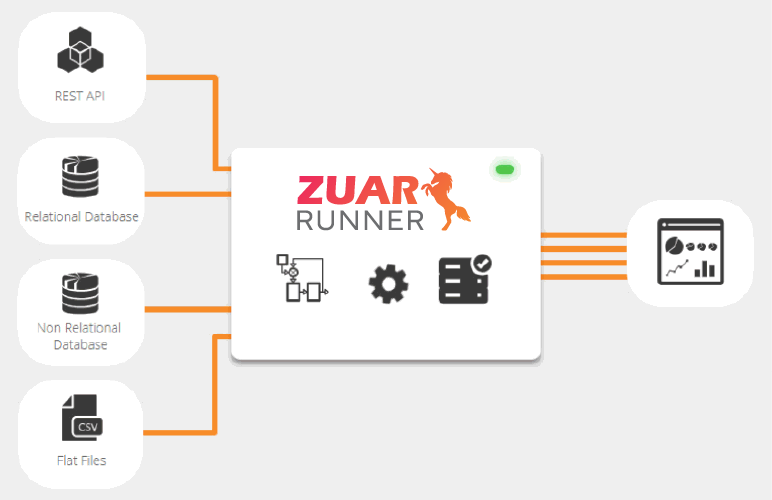

How do I transfer data from Azure Blob Storage to a database?

Zuar provides an ELT solution named Runner that pulls data from Azure Blob Storage (and many other sources), normalizes it (making the data database and analysis-ready), and stores it in the database or data lake of your choice.